Originally published on Cprime.

Assessing your system’s reliability through SLOs is a great way to understand and measure how happy users are with your service(s). Error Budgets tell you how much unreliability you can still tolerate before users become unhappy. Ideally, you want to be alerted well before users are dissatisfied, so you can take the appropriate measures to ensure they never are. How can you achieve that?

That’s where alerting on SLOs and Error Budget Policies come into the picture. By tracking user happiness through SLOs, and alerting well before dissatisfaction reaches critical levels, you’ll be able to define policies that deal with issues in a timely manner, ensuring operational excellence.

Alert on SLOs

How to Measure System Reliability describes the building blocks of the reliability stack that enables you to measure, assess, and have informed discussions about reliability:

- Service Level Indicators (SLI) represent a quantifiable measure of service reliability

- Service Level Objectives (SLO) define how often an SLI must be met, within a time window, for users to be happy with your service

- Error Budgets represent the amount of unreliability an SLO allows (100% minus the SLO target), i.e. how much reliability you can still lose before the SLO is breached
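To make these three building blocks concrete, here is a minimal sketch assuming a request-based SLI (good requests divided by total requests) counted over the SLO window. The names `SLO`, `sli` and `error_budget_remaining` are hypothetical and not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class SLO:
    """A request-based SLO, e.g. 95% of requests served under 200ms."""
    target: float  # fraction of good events required, e.g. 0.95

    @property
    def error_budget(self) -> float:
        """Fraction of events allowed to be bad: 1 - target."""
        return 1.0 - self.target


def sli(good_events: int, total_events: int) -> float:
    """SLI as the ratio of good events to total events in the window."""
    if total_events == 0:
        return 1.0  # assumption: a window with no traffic counts as fully reliable
    return good_events / total_events


def error_budget_remaining(slo: SLO, good_events: int, total_events: int) -> float:
    """Fraction of the error budget left: 1.0 = untouched, <= 0.0 = exhausted."""
    bad_fraction = 1.0 - sli(good_events, total_events)
    return 1.0 - bad_fraction / slo.error_budget
```

For example, with a 95% SLO and 980 good requests out of 1,000, 2% of requests were bad out of the 5% allowed, so `error_budget_remaining(SLO(0.95), 980, 1000)` returns roughly 0.6.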

This foundation allows you to measure user satisfaction and opens the door to the creation of meaningful, actionable alerts that accurately track your services’ reliability levels.

SLO thresholds

Traditional alerting practices page engineers with alerts that do not directly track user satisfaction. Being paged at 3 a.m. because a server’s CPU usage has been above 80% for the past 5 minutes is standard procedure. And while this is important information, it’s not worth waking someone up if no users are being impacted.

The concept of alert thresholds can also be applied to SLOs. Let’s imagine you defined an SLO stating that, over a rolling 30-day window, 95% of requests need to be served under 200ms. Once that SLO is breached, users are really being impacted, and that’s worth the trouble of waking someone up. Despite being better than traditional alert thresholds, this approach has a few drawbacks:

- It’s a reactive approach: you’ll only receive an alert once unreliability has already exceeded the accepted level

- Static thresholds are unable to keep up with your service’s evolution. Thresholds are defined around the conditions of today and can quickly become obsolete. Service changes, hardware upgrades, or library patches can impact the meaningfulness of a threshold
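A static SLO threshold like the one described above could be sketched as follows. This assumes you have the latency of every request in the rolling 30-day window at hand; in practice the counts would come from your metrics backend, and the function and constant names are hypothetical.

```python
from typing import Sequence

LATENCY_THRESHOLD_MS = 200.0  # a request is "good" if served under 200ms
SLO_TARGET = 0.95             # 95% of requests must be good over the window


def slo_breached(latencies_ms: Sequence[float],
                 threshold_ms: float = LATENCY_THRESHOLD_MS,
                 target: float = SLO_TARGET) -> bool:
    """Reactive check: True only once the SLO over the window is already violated.

    `latencies_ms` is assumed to hold every request latency in the rolling
    30-day window.
    """
    if not latencies_ms:
        return False  # no traffic, nothing to alert on
    good = sum(1 for latency in latencies_ms if latency < threshold_ms)
    return good / len(latencies_ms) < target
```

Because it only looks at the whole window, this check fires after users have already been affected, which is exactly the first drawback listed above; the hard-coded 200ms and 95% also illustrate the second one.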

A better approach is a proactive one, where you are alerted before reliability levels have been breached.

Error Budget Burned

An error budget is the amount of unreliability you can tolerate before reaching critical reliability levels, and it should not be treated as just another metric to track. An error budget can be leveraged to create alerts that warn you when something is not working properly, well before your SLO target is compromised. Let’s use the previous example, where you have an SLO stating that, over a rolling 30-day window, 95% of requests need to be served under 200ms. This means that 5% of all served requests can take longer than 200ms. If all requests have been served under 200ms, your entire error budget is still available (100%). You can then define alerts on the percentage of error budget burned. For example:

- 25% of error budget burned would trigger an email

- 50% of error budget burned would trigger a message to Slack

- 75% of error budget burned would trigger a page
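The tiered alerts above can be sketched as a lookup from "budget burned" to a notification channel. This is a minimal illustration, assuming the budget is measured against the requests seen so far in the window; `budget_burned`, `alert_channel` and the channel names are placeholders, not a real alerting API.

```python
from typing import Optional

# Thresholds from the example above, ordered from most to least severe.
# "page", "slack" and "email" are placeholder names for your notification integrations.
BURN_THRESHOLDS = [
    (0.75, "page"),
    (0.50, "slack"),
    (0.25, "email"),
]


def budget_burned(slo_target: float, good: int, total: int) -> float:
    """Fraction of the error budget consumed by the requests seen so far in the window."""
    if total == 0:
        return 0.0
    allowed_bad = (1.0 - slo_target) * total  # e.g. 5% of requests may be slow
    bad = total - good
    # Round to absorb floating-point noise so 25/50 lands exactly on 0.5, not just below it.
    return round(bad / allowed_bad, 6)


def alert_channel(burned: float) -> Optional[str]:
    """Return the most severe channel whose threshold has been reached, if any."""
    for threshold, channel in BURN_THRESHOLDS:
        if burned >= threshold:
            return channel
    return None
```

Alerting on error budget left instead is the same check expressed as `1.0 - budget_burned(...)` compared against 75%, 50% and 25% remaining.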

Increasing levels of error budget burned trigger increasingly urgent alert mechanisms. Conversely, you could alert on error budget remaining: instead of sending alerts on thresholds of error budget burned, you would send them on thresholds of error budget left. Both approaches achieve the same result.

This proactive approach alerts you before serious problems arise, but it is still limited by its static nature. An even better approach would be to trigger alerts when you can predict that your SLO is going to be breached.

Burn rate

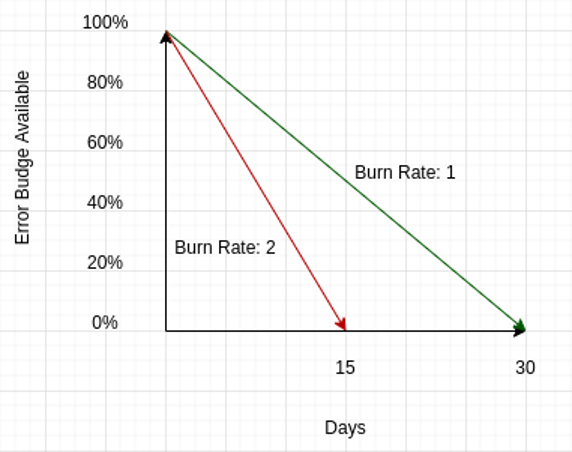

Burn rate indicates how fast your error budget is being consumed, relative to the SLO: it is the observed error rate divided by the error rate the SLO allows. Using the previous example, where the SLO allows 5% of requests to take longer than 200ms, an average error rate of 5% over the 30-day period would consume exactly all of your error budget by the end of the window, which corresponds to a burn rate of 1. With a burn rate of 2, all available error budget would be consumed in half the time window (15 days).
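The burn rate arithmetic from the example can be written out as a short sketch. It assumes a full error budget at the start and a burn rate that stays constant, which real traffic rarely does; the function names are illustrative.

```python
def burn_rate(error_rate: float, slo_target: float) -> float:
    """How fast the error budget is consumed: observed error rate / allowed error rate."""
    allowed_error_rate = 1.0 - slo_target  # 0.05 for a 95% SLO
    return error_rate / allowed_error_rate


def days_to_exhaustion(rate: float, window_days: float = 30.0) -> float:
    """Days until a full error budget is gone, assuming the burn rate stays constant."""
    if rate <= 0:
        return float("inf")  # no errors: the budget is never consumed
    return window_days / rate


# A 5% error rate against a 95% SLO burns at ~1 (budget lasts the full 30 days);
# a 10% error rate burns at ~2 (budget gone in ~15 days).
for observed in (0.05, 0.10):
    rate = burn_rate(observed, 0.95)
    print(f"error rate {observed:.0%}: burn rate {rate:.1f}, "
          f"budget exhausted in {days_to_exhaustion(rate):.1f} days")
```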

By configuring alerts based on burn rate, you can be sure a significant portion of your error budget has already been spent and you need to look into it. But you can go one step further.

You can extend the concept of burn rate to consider multiple burn rates over multiple windows. Instead of only looking at your entire 30-day window, you could, for example, look at the last 2h and the last 24h and treat these as your fast and slow burn rates. These alerts allow you to catch sudden, large changes in error budget consumption that a 30-day view would smooth over. You could decide, for example, that a burn rate of 10 over the last 2h requires your immediate attention.
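A multi-window check along these lines could look like the sketch below. The threshold of 10 for the 2h window follows the example in the text; the threshold of 2 for the 24h window and the "page"/"ticket" outcomes are illustrative assumptions, as are the `WindowCounts` input and function names.

```python
from dataclasses import dataclass
from typing import Optional

SLO_TARGET = 0.95  # 95% of requests under 200ms

# Hypothetical thresholds: 10 for the 2h window follows the example in the text;
# 2 for the 24h window is an illustrative assumption, not a recommendation.
FAST_WINDOW_THRESHOLD = 10.0  # last 2h
SLOW_WINDOW_THRESHOLD = 2.0   # last 24h


@dataclass
class WindowCounts:
    """Good and total request counts for one lookback window (hypothetical input)."""
    good: int
    total: int


def burn_rate(counts: WindowCounts, slo_target: float = SLO_TARGET) -> float:
    """Error rate over the window divided by the error rate the SLO allows."""
    if counts.total == 0:
        return 0.0
    error_rate = (counts.total - counts.good) / counts.total
    return error_rate / (1.0 - slo_target)


def evaluate(last_2h: WindowCounts, last_24h: WindowCounts) -> Optional[str]:
    """Return the alert to raise, checking the fast (2h) window first."""
    if burn_rate(last_2h) >= FAST_WINDOW_THRESHOLD:
        return "page"    # sudden, large spike: needs immediate attention
    if burn_rate(last_24h) >= SLOW_WINDOW_THRESHOLD:
        return "ticket"  # slower, sustained consumption: look into it soon
    return None
```

For instance, 600 slow requests out of 1,000 in the last 2h is a 60% error rate, a burn rate of 12 against a 5% allowance, so `evaluate` returns `"page"` regardless of the 24h window.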

When these alerts are triggered, you can be sure action is needed. Maybe engineers need to jump on a conference call and write a postmortem document. Or maybe they need to stop feature work and focus on reliability work for the next sprint. But what exactly should they do?

Error Budget Policies

When faced with error budget violations, you need to know what to do. Error budget policies define the thresholds at which action is required and the actions to take to bring error budget consumption under control. Every SLO should have a policy in place, and that policy should be revisited regularly.

A policy can be as simple as “If error budget is depleted, fix your reliability issues”, but its real value shows when policies are carefully thought out and crafted. Policies should include an overview of the service they refer to, its goals and non-goals, the policy itself, exceptions to the policy, and any additional background information. A complete example can be found in The Site Reliability Workbook. A simple policy could look something like this:

- If a single event consumes 25% or more of the error budget, a postmortem must be conducted, and it must include a P0 action item that addresses the reliability issue that caused it

- If 75% of the error budget has been consumed, 50% of engineers must focus on reliability work

- If the SLO has been breached, feature work must stop and all engineers must focus on restoring it. Only P0 issues and security fixes can be released until the service is back within SLO
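A policy is primarily a human agreement, but encoding its thresholds can help alerts state which clause applies. The sketch below is a hypothetical translation of the example policy above; `policy_actions` and the action strings are illustrative paraphrases, not part of any tool.

```python
from typing import List


def policy_actions(budget_consumed: float, worst_event_share: float) -> List[str]:
    """Map the current error budget state to the actions the example policy requires.

    budget_consumed: fraction of the window's error budget used (above 1.0 = SLO breached).
    worst_event_share: fraction of the budget consumed by the single worst event.
    """
    actions = []
    if worst_event_share >= 0.25:
        actions.append("postmortem with a P0 action item")
    if budget_consumed > 1.0:
        # The SLO itself is breached: the strictest clause supersedes the 75% clause.
        actions.append("feature freeze: only P0 and security fixes ship")
    elif budget_consumed >= 0.75:
        actions.append("50% of engineers on reliability work")
    return actions
```

Consuming exactly 100% of the budget means the SLI sits right at the target, so the SLO is only treated as breached once consumption goes above 1.0.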

Despite its simplicity, this policy defines clear thresholds and actions that must be taken. It’s imperative that SLO breaches have consequences for them to be taken seriously. If not, it will be difficult to prioritize reliability work.

Conclusion

SLIs, SLOs, and Error Budgets provide the foundations needed to measure, assess, and prioritize reliability from the users’ point of view. They create a framework and a language that allow different teams to understand and talk about reliability.

Before user dissatisfaction reaches critical levels, you want to take proper action and ensure operational excellence. Alerting on SLOs serves this purpose, and techniques such as alerting on error budget burned and on burn rate let you become aware of issues well before your SLO has been breached.

When these alerts are triggered, error budget policies will describe what actions need to be performed. They ensure that, when certain criteria are met, the necessary actions are consistently followed.